- Enabling the sharing of personal information by default in child-directed services, including personal information from teen users, may present risk.

- Compliance needs to apply retroactively.

- In assessing whether a service is directed to children, the FTC looks beyond the four corners of the product.

- Training matters.

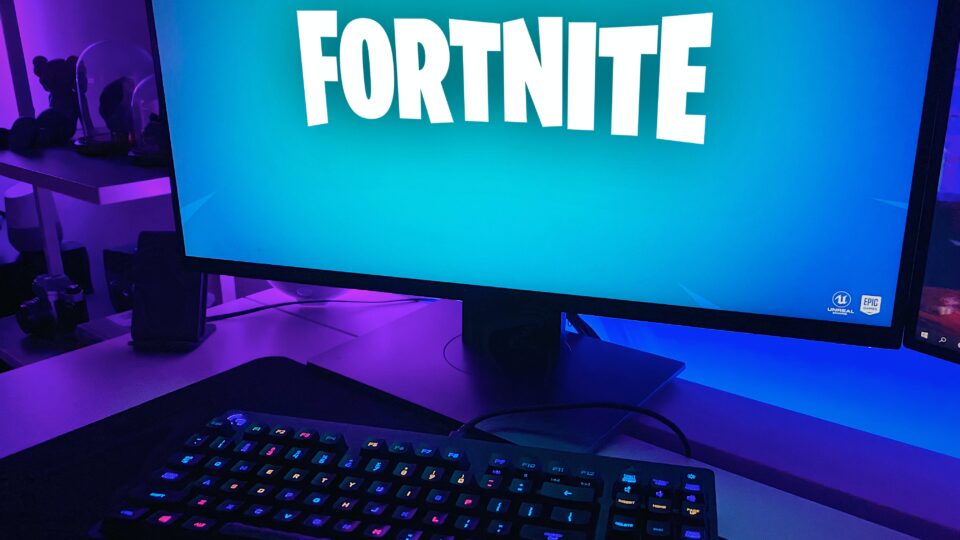

On December 19, 2022, the Federal Trade Commission ("FTC") announced the largest-ever penalties for violations of the Children's Online Privacy Protection Act ("COPPA") and for unfair use of dark patterns, in a pair of settlements with Epic Games ("Epic"), the creator of the video game Fortnite. Under the two settlements, Epic will pay a combined $520 million, more than half a billion dollars. Importantly, the case signals that companies should evaluate how their privacy practices affect teen users as well as child users, or risk scrutiny from federal regulators.

Epic will pay $275 million for allegedly violating COPPA by collecting personal information from children under the age of 13 without notifying their parents or obtaining verifiable parental consent. This settlement also resolves claims that Epic engaged in an unfair practice in violation of the FTC Act by enabling default settings that allowed children and teens to communicate in real time with strangers who harassed and threatened them. In a first-of-its-kind allegation and resulting injunctive relief, Epic must turn off in-game communications by default for children and teens and must obtain affirmative express consent from a child's parent or from a teen before enabling those features.

Epic will pay an additional $245 million for allegedly using so-called "dark patterns" to trick players into making purchases and to inhibit them from canceling or obtaining refunds, and for deactivating the accounts of players who disputed the charges.

COPPA & FTC Act Violations/Settlement

COPPA Violations

According to the complaint, Epic violated COPPA by operating a child-directed service that knowingly collected personal information from children under 13 without notifying their parents and without first obtaining verifiable parental consent. The FTC alleged that Fortnite is directed to children based on, among other things, the game's cartoon and animated graphics, its "build-and-create" game mechanics akin to childhood fort building, Epic's licensing and highly successful marketing of Fortnite toys and merchandise to children, and Epic's partnerships with celebrities and brands popular with children. The FTC also alleged that Epic had actual knowledge, in the form of user surveys, player support interactions, and marketing pitches, that it had collected personal information from specific users under 13.

The complaint further alleged that Epic required parents to jump through unreasonable hoops to verify their parental status before the company would delete their children's personal information. For instance, Epic required parents to provide detailed and burdensome information, such as all IP addresses used to play Fortnite, the date the child's Epic account was created, an invoice ID for an Epic purchase, the date of the child's last Fortnite login, and the child's original Epic account display name. Even when parents provided this information, Epic sometimes demanded more, such as a copy of the parent's passport, identification card, or recent mortgage statement.

FTC Act Violations

The Commission also alleged that enabling real-time voice and text chat by default for children and teens constituted an unfair practice in violation of the FTC Act. According to the FTC, child and teen users were matched with strangers for in-game communications, often resulting in bullying, threats, and harassment.

COPPA & FTC Act Settlement

The settlement requires Epic to:

- Pay a $275 million civil penalty;

- Delete personal information previously collected from children and teen players (unless Epic obtains parental consent to retain the data or the user identifies as 13 or older through a neutral age gate);

- Disable real-time voice and text chat for children and teens by default, enabling it only when the parent of a user under 13, or a teen user (or the teen's parent), provides affirmative express consent through a privacy setting;

- Maintain a comprehensive, COPPA-compliant privacy program; and

- Submit to third-party privacy assessments every two years for twenty years.

Dark Patterns Violations/Settlement

In a separate complaint, the FTC alleged that Epic used dark patterns to make accidental purchases easier and to make canceling purchases or requesting refunds harder. In particular, the complaint alleged that (i) players could purchase items with a single click, whereas canceling a purchase or requesting a refund required prolonged actions or convoluted, multi-step processes; (ii) Epic placed the purchase button where players were likely to click it accidentally, including by swapping its position with buttons that players frequently pressed for other game functions on other screens; (iii) Epic's disclosures about how to cancel were insufficient; and (iv) Epic hid details about the refund process and required consumers to complete unnecessary steps to submit a request (such as providing a reason for the refund).

The complaint also alleged that Epic deactivated the accounts of players who disputed unauthorized charges with their credit card companies, resulting in players losing their prior purchases without any refund from Epic.

Dark Patterns Settlement

The dark pattern settlement requires Epic to:

- Pay $245 million in refunds to players affected by these practices;

- Obtain express, informed consent before charging a player for in-game purchases;

- Provide a mechanism for players to revoke consent to a charge at any time, which must be as simple as the mechanism to initiate the charge;

- Refrain from deactivating player accounts for disputing unauthorized charges; and

- Adhere to obligations related to compliance reporting, recordkeeping, and monitoring.

KEY TAKEAWAYS

While much of this action reiterates lessons from past COPPA and other settlements, the key new takeaways are:

- Enabling the sharing of personal information by default in child-directed services, including personal information from teen users, may present risk.

The FTC has made clear that it will evaluate whether default data-sharing settings pose potential harm to child and teen users and will consider whether such practices are unfair under the FTC Act. The FTC has extended its enforcement beyond COPPA's under-13 scope to give special consideration to the effects of data sharing on teens. The action also suggests that the FTC will use its authority under the FTC Act to impose obligations similar to those in California's recently enacted Age-Appropriate Design Code, which takes effect in July 2024.

- Compliance needs to apply retroactively.

Over the course of the FTC's multi-year investigation, Epic did change some policies for Fortnite, but it did not apply those changes to existing accounts that belonged to known children. Epic must now delete the data associated with those users or go back and obtain consent to retain it.

- In assessing whether a service is directed to children, the FTC looks beyond the four corners of the product.

While prior COPPA cases have looked to merchandising and external surveys, Fortnite's popularity produced a profusion of evidence that minors use the game. Fortnite-branded Nerf guns, action figures, and Halloween costumes made it easy for the FTC to allege that Epic was seeking to build a child audience for Fortnite.

- Training matters.

Epic's internal communications demonstrated widespread knowledge that children were playing Fortnite. Companies that may encounter children's data need to establish policies and procedures for employees and contractors to report and investigate possible child users. Marketing and development teams also need to understand how their positioning and growth decisions shape the product's audience, so that they either avoid building for children or support compliance for child and teen users.